A curious robot is poised to rapidly expand reef research

WHOI scientists with the Coral Catalyst Team are leveraging a new, artificially intelligent robot to automate coral reef health assessments

This article printed in Oceanus Summer 2022

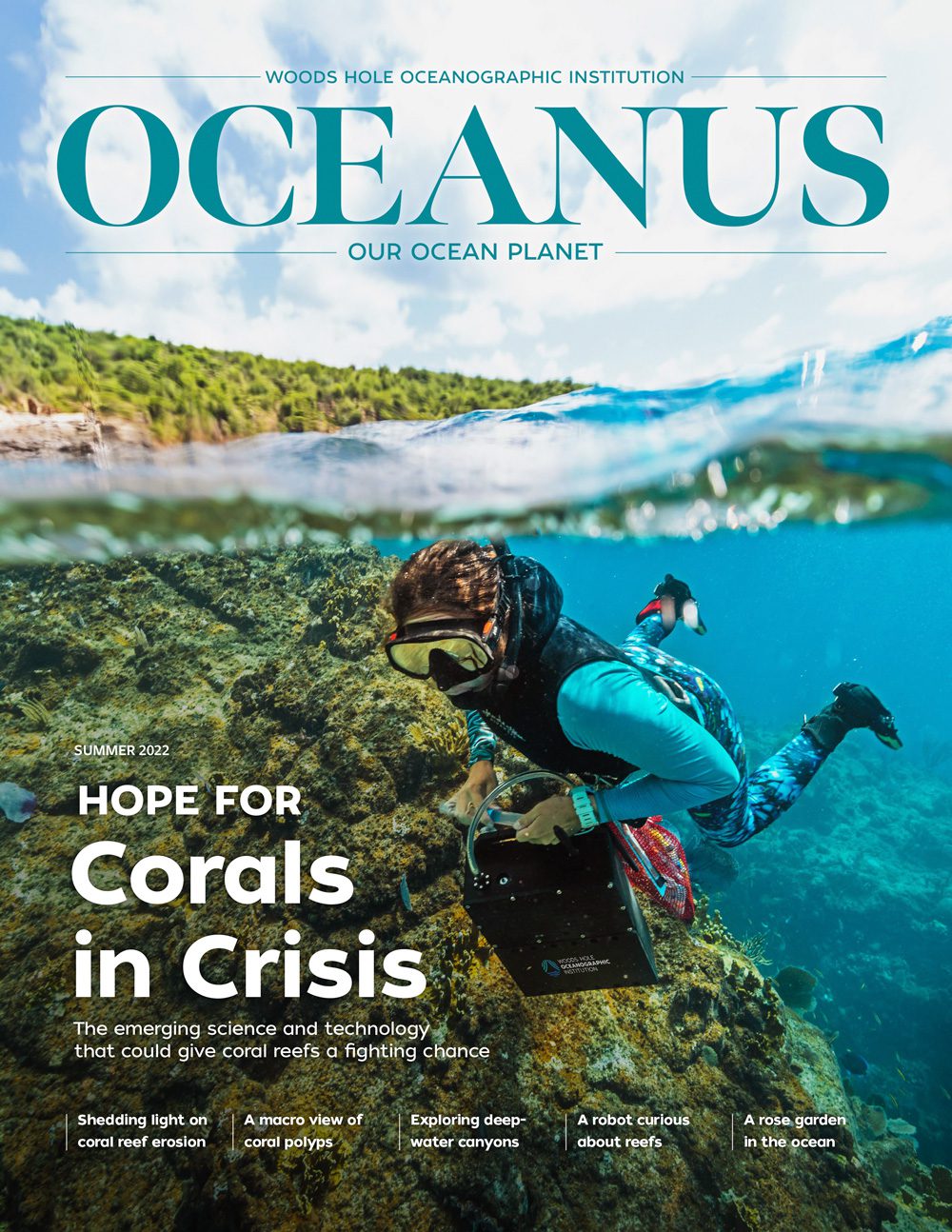

The latest iteration of a portable WHOI robot is learning to autonomously observe, track, and listen to reef animals using artificial intelligence, a promising milestone for scientists trying to automate biodiversity and health assessments on coral reefs.

In October 2021, scientists with WHOI's Coral Catalyst Team and the Autonomous Robotics and Perception Lab (WARPLab) met in the U.S. Virgin Islands to test the new animal-tracking and reef-scanning algorithms powering the bot. The trip marked the first reef-based field test for the marine vehicle, known as the WARP-AUV (pronounced "warp ay-yoo-vee"), and the start of a four-year project to build the robot's capacity to think on its own. For scientists trying to get a handle on the health of the world's declining reefs, the robot is a multitalented tool that will soon hear, see, and analyze the changes befalling these marine ecosystems.

"The current ways of coral research don't scale very well, because you'd still have to have all the data collected and annotated [manually]," says WHOI computer scientist Yogi Girdhar, who's been leading the "Curious Robot" project for the last four years. "This isn't feasible in the context of long-term, large-scale exploration and monitoring of reefs."

Reef health surveys typically run for weeks (sometimes months), often for just one location. By the time scientific divers reach a neighboring reef, it may have already undergone massive change from things like hurricanes, overfishing, or disease.

"Every reef gets exposed to a particular range of temperatures or disturbances, and those ecosystems evolve under each set of constraints," says Nadège Aoki, an MIT-WHOI PhD student in WHOI's Sensory Ecology and Bioacoustics Lab.

With a larger, more widespread fleet of reef robots, Girdhar notes, marine scientists will be able to collect data across a bigger area in a fraction of the time. That could free them to focus more on developing solutions for problems like coral bleaching, disease, or waning biodiversity.

"We don't want to replace scientists, we just want to help them be as efficient as possible," says MIT-WHOI PhD student and computer scientist Stewart Jamieson. "Our robot empowers the science team to be much more productive and to understand larger and more complex areas."

Jamieson is part of Girdhar's team of seven PhD students and postdocs who regularly program the WARP-AUV to learn about the marine world around it automatically once it hits the water. In the lab, they feed the robot's brain thousands of images of marine life to give it a baseline understanding of the habitat. Other photos, taken by WHOI's deep-water vehicle Mesobot, have helped the machine pick out fish and even semi-transparent jellyfish against dim backgrounds of bubbles and blues, sometimes with startling accuracy.

"The robot can teach itself to recognize so many different things," says Northeastern University guest student and engineer Nathan McGuire. "When it sees something new, it can identify it," he adds, and the robot can then ask researchers whether it should pay more attention to it.

(In order of appearance) Computer scientist Yogi Girdhar and MIT-WHOI engineering student Jess Todd supervise the WARP-AUV during tests on Tektite Reef in St. John. There, the vehicle glides around the corals to find animals to track. Back ashore, the team reviews footage from the WARP-AUV's stereo and 360° cameras, recorded as the robot autonomously followed a barracuda using software developed by MIT-WHOI Joint Program student Levi Cai. (Video by Dan Mele, Edited by Daniel Hentz, © Woods Hole Oceanographic Institution)

In one of the robot’s first field tests, topside supervisors used their laptops to click on reef creatures seen in a live feed from WARP-AUV’s stereo camera system, streamed over a tether. Eventually, the robot locked on to a barracuda and quietly tailed the predator for 10 minutes before bumping into its fin, a lesson in etiquette that would later be reflected in the vehicle's code.

In subsequent dives, researchers trained WARP-AUV by having divers mimic the erratic movements of evading fish. Over time, they hope these exercises will improve the vehicle’s stealth.

“It’s a whole different perspective following a single fish,” says Girdhar. “You start to see things like the creature’s ‘apartment complex’ and its ‘annoying neighbor,’ the damselfish.”

For Girdhar’s Coral Catalyst teammates, like sensory biologist Aran Mooney, that level of behavioral data will help reef scientists understand how animal communities differ between thriving and dying habitats. Using WARP-AUV’s other tools, like its four recording hydrophones, Mooney can also compare reef sounds as the robot zooms from one location to another. Differences in sound between healthy and sick reefs may later become another metric for surveying reef health.

The robot’s axial and forward propellers and its light, 50-pound design allow it to stop on a dime, sometimes freezing in mid-water to passively observe or actively count the traffic around it. Such maneuverability also means it can get within inches of coral polyps to scan for signs of disease, such as the fast-spreading Stony Coral Tissue Loss Disease.

“There are some key parts of the reef, biodiversity hubs, that act like ‘Main Street’ or community centers,” says Mooney. “When they decline, the whole reef declines, so we need to understand them before they’re gone.”

In the coming years, WARPLab will work closely with other Catalyst members to tailor the WARP-AUV’s directives first to their individual projects and then to the needs of other research efforts around the globe. For now, Girdhar suggests, its ability to observe the ocean’s most complex environment so closely and with such a delicate touch is nothing short of a breakthrough.

“If we can make a robot work well in a coral environment, we can make it work anywhere in the ocean.”

Funding for the Curious Robot project and the WARP-AUV comes from NSF-NRI Grant #1734400 and WHOI's Coral Catalyst Program.