Sea Ahead

The game-changing ocean technologies that will transform our ability to understand—and manage—Earth’s last great frontier

By Evan Lubofsky | July 27, 2020

Illustration by Natalie Renier, WHOI Creative, © Woods Hole Oceanographic Institution

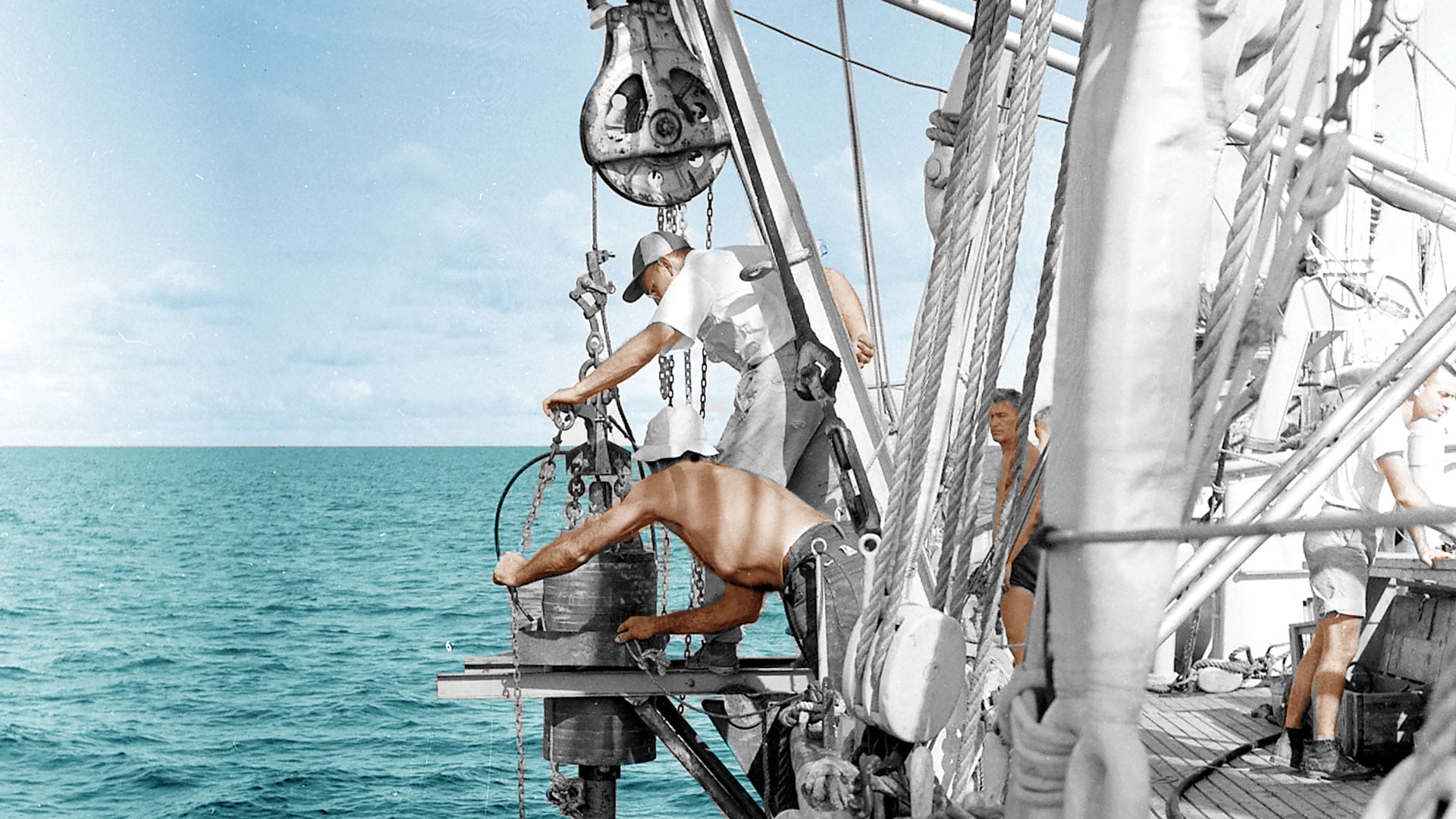

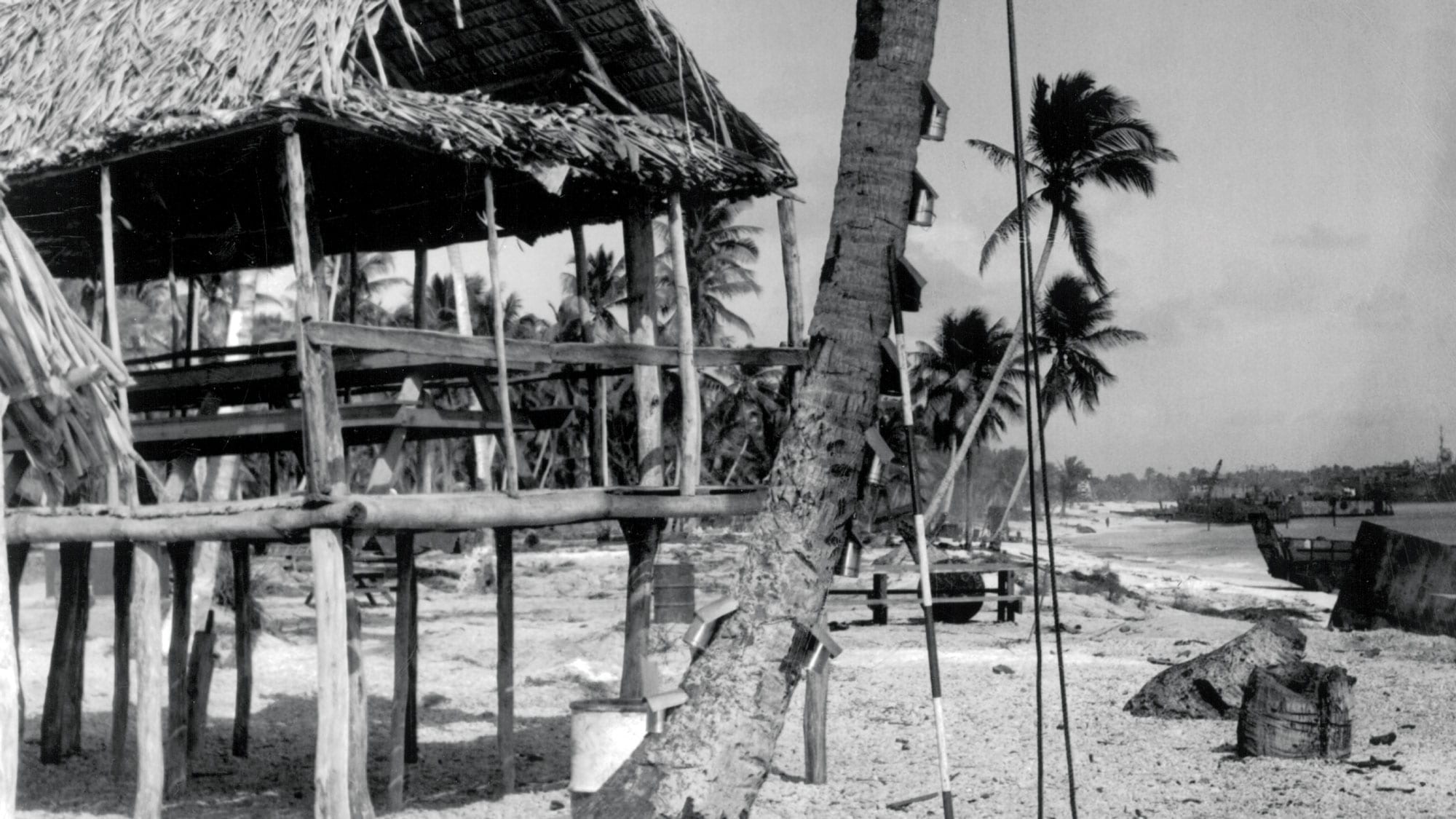

The palm tree was a peculiar sight. In many respects, it was identical to countless other coconut palms collaring the turquoise lagoon. But this one stood out: Empty food cans were nailed up along its curved trunk at three different, evenly spaced heights, like a try-your-luck beanbag game at a carnival.

But on that hot July afternoon in 1946, there was no time for fun. The first in a series of nuclear bombs was hours away from being dropped on Bikini Atoll, a low-lying slice of paradise in the Marshall Islands, during the post-World War II weapons testing campaign known as Operation Crossroads.

WHOI scientists were standing by. They had come to Bikini to learn more about atomic explosions and the ocean—from above and below the surface.

They were particularly interested in studying the height of waves generated by the blasts. But there was one small problem: there were no wave-height sensors or tide gauges on hand to measure them.

So WHOI engineer Allyn Vine (for whom the famed submersible Alvin was named) came up with the idea of nailing empty bean cans to palm trees on Bikini and surrounding islands. The cans would act as makeshift tide gauges, trapping seawater and sediment deposited by the explosion’s basal surge.

An ocean tech revolution

Bean cans may have been a crude but ingenious answer to a science problem in 1946, but today they are emblematic of the close partnership between ocean scientists and engineers.

Monitoring instruments, and ocean technologies in general, have come a long way since the bean can. We now have artificial intelligence (AI)-enabled robots that not only allow researchers to reach the most remote spots in the ocean, but can also decide where to explore once they get there. New types of underwater vehicles mimic the weird and exotic animals they’re studying in the ocean twilight zone, a shadowy layer between 200 and 1,000 meters deep, just beneath the sunlit surface. And aerial drones measure whales and seals in their natural habitats without scientists ever having to touch them.

It may seem the future of ocean technology is already here, but according to WHOI chief technology strategist Chuck Sears, we’ve only scratched the surface.

“Right now, we’re on the cusp of a number of fundamental technological breakthroughs that will be game-changing for oceanography,” says Sears. “Decades from now, I don’t think the ocean technology landscape will look anything like it does today.”

Always on, always connected

The sun was slowly sinking over Boston’s Charles River on an early June evening in 2019 when a trio of tiny ocean robots was unleashed into the water. As darkness set in, the robots began cruising the river. Unlike most ocean robots, which run their missions independently of one another, these robots were using acoustic communications to explore together.

“We put strobe lights on the robots so we could see them moving in sync through the water. It was pretty amazing to watch,” says WHOI engineer Erin Fischell, who is on her own mission to replace large, expensive underwater robots with swarms of smaller robots that work cooperatively. The goal, she says, is to deploy a fleet of tiny robots for less than the cost of one or two larger, more complex robots.

WHOI biologist Tim Shank says this concept fits squarely into deep-sea exploration, particularly in the Hadal Zone, the deepest part of the ocean, which reaches depths of about 11,000 meters. With a total area roughly five times the size of Texas, the Hadal Zone is considered the least explored place on Earth.

Shank wants to change that. Together with WHOI lead engineer Casey Machado and NASA’s Jet Propulsion Laboratory, he has developed a bright-orange ocean robot named Orpheus, the first in a new class of lightweight, low-cost autonomous underwater vehicles (AUVs). Orpheus can withstand the pressure of the ocean’s greatest depths and can explore independently or as part of a networked “fleet.”

“Orpheus is a key component of our HADEX hadal exploration program,” says Shank. “In the future, I can envision twenty or more of these low-cost robots exploring hadal trenches cooperatively.”

This concept feeds into a long-range vision for what WHOI senior scientist Dennis McGillicuddy calls a networked ocean. He says the digital ocean ecosystem of the future will rely on an integrated network of underwater vehicles, sensors, and communications systems that will cover the ocean in an “always on, always connected” way.

“A networked ocean will connect individual vehicles and instruments, and provide real-time information about what’s happening in the ocean, much like the National Weather Service,” says McGillicuddy.

Heterogeneous “swarms” of robots are part of the mix, but other advances round out the picture. McGillicuddy envisions ocean observing systems like the Pioneer array, which tracks the shelf-break front south of New England, and the OSNAP array, which tracks Atlantic Ocean circulation, expanding to span the global ocean and beaming data back to scientists via acoustic, optical, and satellite communications. There will also be a trend toward “data mule” vehicles on the surface that receive data from AUVs below and relay it to satellites for transmission to laboratories on shore. And fixed docking stations will be deployed in the open ocean, allowing ocean vehicles to offload data and recharge before heading to their next exploration site.

A new nerve center

If a networked ocean is the brain stem, sensors are the cranial nerves.

Today’s ocean sensors detect things in the water that humans can’t, giving scientists a fuller picture of ocean phenomena, whether it be the speed and direction of ocean currents, changes in seawater chemistry, carbon cycling, or biological productivity in the deep sea.

Yet according to Jim Bellingham, director of WHOI’s Center for Marine Robotics, the oceanographic community is “massively underinvested” in sensors. The proprietary, “one-off” design of certain sensors can drive costs through the roof, making it difficult or impossible for scientists to deploy them in large numbers.

Another issue is size—some sensors are so large and bulky that they can only be transported by large remotely operated vehicles (ROVs), or towed by a ship.

Bellingham says future ocean sensors will become increasingly compact and affordable as they take advantage of smaller and more powerful microprocessors being developed for consumer electronics.

“You can make highly capable things that are small and low-cost, as long as you can scale out,” says Bellingham.

Sears shares a similar view. He says ocean technologies in general have often been “exquisite and available to very few,” but feels electronics will become significantly smaller, cheaper, and more capable.

“Eventually, it will become possible to create entire 3D ocean imaging systems that are smaller than a dime,” he says.

That’s a far cry from some of the hefty sensors of today, like the Environmental Sample Processors (ESPs) used to study harmful algal blooms. The original ESPs rival punching bags in size, but thanks to smaller components, the latest generation is about half as big and can be deployed on a wider range of vehicles.

Rather than relying on just a single, larger, and more expensive underwater robot to cover an area of the ocean, ocean scientists hope to leverage hundreds or even thousands of smaller, lower-cost robots all working in sync as depicted here. (Illustration by Tim Silva, WHOI Creative, © Woods Hole Oceanographic Institution)

Remote ocean sensing also stands to benefit from smaller, relatively low-cost technology. WHOI engineer Paul Fucile sees a trend toward the increased use of CubeSats, miniature satellites smaller than a shoebox, for measuring ocean temperature, color, and salinity from space.

“A CubeSat can be extremely useful for oceanography,” says Fucile. “Much the way that gliders emerged in scientific use some 20 years ago and are quite common in the community today, CubeSats have the capability to provide an investigator with an economically customized and dynamic sampling tool that can go from conception to launch in as little as two to three years.”

The future will also see new types of sensors for studying ocean biology, says Andy Bowen, director of WHOI’s National Deep Submergence Facility, in particular sensors that can shed light on life in the shadowy ocean twilight zone.

“We know so little about this vast area of the ocean, so there’s a huge push—and a huge challenge—to develop new sensing solutions,” he says.

This will include high-sensitivity sensors that measure light levels in the twilight zone. Bowen says these sensors will help scientists understand how solar radiation drives the daily migration of mesopelagic animals to the surface to feed. And new sensors for measuring environmental DNA (eDNA), the genetic traces organisms leave behind as they move through the water, will help scientists track which organisms live in the twilight zone and identify previously unknown species.

Expanding the view

Ocean scientists today are getting unprecedented glimpses below the surface, thanks to advances in high-definition camera and lighting systems, multi-beam sonar, lasers, satellites, and other imaging technologies. Today, 4K resolution cameras are used to spy on seal-prey interactions in fishing nets near the surface, underwater video microscopes image plankton and other organisms in the ocean’s midwater, and stereo machine vision camera systems document hydrothermal vents in the deep.

But despite the tremendous strides made in imaging technology, researchers want to see more.

“Today, we can create maps of the seafloor with amazing clarity,” says WHOI marine geologist Adam Soule. “But the problem is, only a very small portion is mapped.”

Estimates suggest that less than 20% of the world’s ocean floor has been mapped, a figure that pales in comparison to our topographic knowledge of the Moon. This is a problem: a good read on the seafloor is essential to understanding ocean circulation and its effects on climate, as well as tides and underwater geohazards.

Soule says new sonar imaging tools will be needed to facilitate a complete mapping of the ocean floor—a lofty goal of the project known as Seabed 2030.

SINGLE BLADE

WHOI engineer Robin Littlefield carries his miniature Single Blade submersible in Woods Hole, Mass. (Photo by Kalina Grabb, © Woods Hole Oceanographic Institution)

As ocean sensors continue to shrink in size, they’ll become more suitable for use on smaller submersibles like Single Blade. Driven by a single-bladed propeller and a lone tiny motor, it maneuvers through the ocean without fins, actuators, or additional thrusters. According to WHOI engineer Robin Littlefield, who designed Single Blade with Jeff Kaeli, Fred Jaffre, and Ryan Govostes, it takes the simplicity of autonomous underwater vehicles (AUVs) to a whole new level.

“We are very excited about this technology because it dramatically simplifies the hardware needed to propel and control an AUV. This makes for a more compact and reliable means of propulsion and opens up new possibilities for exploration,” Littlefield says.

Illustrations by Natalie Renier, WHOI Creative, © Woods Hole Oceanographic Institution

“One idea is to build barges that are essentially huge floating sonar systems,” says Soule. “This will give us a much bigger array to work with and cover more of the seabed in less time.”

Bellingham also feels that new tech approaches to mapping are in order, but sees the potential for smaller-scale solutions.

“Today, we use large physical apertures on our sonar systems,” he says. “That means our vehicles have to be big enough to accommodate them. In the future, I can see things evolving to the use of synthetic apertures, which can perform the same functions computationally and require far less real estate. This would open up the range of vehicles we could use for seafloor mapping.”
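Bellingham’s point about synthetic apertures can be illustrated with textbook sonar geometry. As a rough sketch under idealized assumptions: a conventional sonar’s along-track resolution grows with range, roughly R·λ/D for a physical aperture of length D and acoustic wavelength λ, while a fully processed synthetic aperture can in principle reach about D/2 regardless of range. The 100 kHz frequency, 0.5-meter aperture, and 1,500 m/s sound speed below are illustrative assumptions, not specifications of any WHOI vehicle or sonar.

```python
# Rough, idealized comparison of real- vs synthetic-aperture sonar resolution.
# All numbers are assumed, illustrative values, not parameters of a real system.
SOUND_SPEED_M_S = 1500.0  # nominal speed of sound in seawater (assumption)


def wavelength_m(freq_hz: float) -> float:
    """Acoustic wavelength in meters for a given frequency."""
    return SOUND_SPEED_M_S / freq_hz


def real_aperture_resolution_m(range_m: float, freq_hz: float, aperture_m: float) -> float:
    """Along-track footprint of a physical array: beamwidth (lambda / D) times range."""
    return range_m * wavelength_m(freq_hz) / aperture_m


def synthetic_aperture_resolution_m(aperture_m: float) -> float:
    """Idealized synthetic-aperture limit: about half the physical aperture, independent of range."""
    return aperture_m / 2.0


freq_hz, aperture_m = 100e3, 0.5  # hypothetical 100 kHz sonar with a 0.5 m array
for range_m in (50.0, 100.0, 200.0):
    real = real_aperture_resolution_m(range_m, freq_hz, aperture_m)
    synth = synthetic_aperture_resolution_m(aperture_m)
    print(f"range {range_m:>5.0f} m: real aperture ~{real:.2f} m, synthetic ~{synth:.2f} m")
```

In this example the physical array’s along-track resolution coarsens to about 6 meters at 200 meters range, while the idealized synthetic limit stays at 0.25 meters; in practice, navigation accuracy and ping rate limit how closely real systems approach that ideal.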

Deep-sea imaging is another area where the tech landscape will continue to evolve, according to WHOI marine geologist Dan Fornari, who has helped pioneer a number of deep-sea imaging systems. Specifically, he sees promise in 3D imaging technologies for the deep ocean, an environment that is often visually noisy.

“When you go into the deep ocean, the visibility can be very poor due to all the particulates floating around and turbulence from currents,” says Fornari. “Even with the best cameras and lights, it can be like pea soup.”

He says 3D imaging could help in these poor-visibility environments by creating fine-scale, physical representations of the features that oceanographers are trying to study. One idea he’s discussed with HOV Alvin vehicle managers is outfitting the sub with a half-dozen cameras for a 360-degree, virtual reality-like view of the terrain as Alvin moves along.

“If we get a really good handle on the physical settings and their structures in three-dimensional space, we can dive more into the biological, chemical, and other process-oriented phenomena happening in the deep in ways not possible in the past,” says Fornari.

Advances in high-definition cameras, lighting systems, and other imaging gear provide scientists with unprecedented glimpses of marine life in the deep sea, like the zoarcid fish and tubeworms at the hydrothermal vent shown here. (Photo by P. Gregg, University of Illinois at Urbana-Champaign/NSF/HOV Alvin 2018 © Woods Hole Oceanographic Institution)

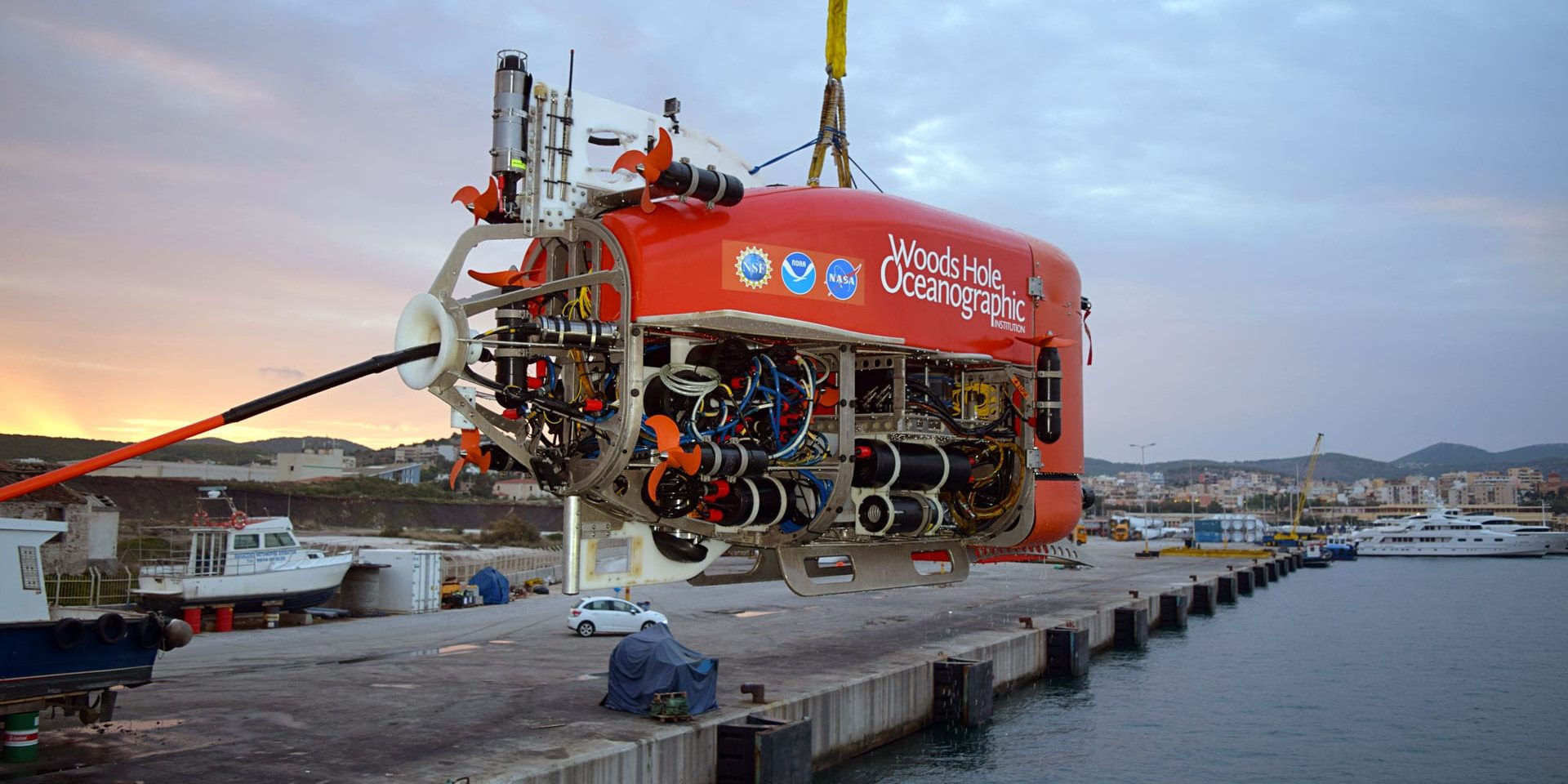

An unmanned future

The ROV Nereid Under Ice (NUI) hovered gently a few feet above thick, colorful carpets of microbes stretched along the mineral-rich seafloor. The robot, about the size of a Smart Car, was navigating the dark and dangerous world of Kolumbo Volcano, an active submarine volcano off Santorini Island, Greece.

Through a bubbling fog of CO₂ gushing from a nearby hydrothermal vent, the robot’s vision cameras locked in on a patch of sediment at the vent’s base. Moments later, without the aid of an ROV pilot, a slurp-sample hose attached to the robotic arm extended down to the precise sample location and sucked up a bit of sediment. It was the first known automated sample taken by a robot in the ocean.

The November 2019 field test was a significant step in the evolution of autonomous ocean robots. It shifts the playing field from standard autonomous vehicles, which rely on scripted mission programs, to fully autonomous vehicles that use AI-based tools to decide where to go and how to move.

According to WHOI scientist Rich Camilli, this level of autonomy will become more important as robots are called on to explore deeper and more extreme parts of the ocean. In particular, a vehicle’s ability to weigh possible scientific gain against safety concerns will be key.

“Sending a robot into these kinds of environments can be like telling someone to hang glide through mid-town Manhattan in a heavy fog,” he says.

But vehicle survivability is just one consideration. Underwater vehicles will also need fuller autonomy for decision making. During the Kolumbo expedition, an automated planning tool named ‘Spock’ gave NUI the ability to decide which areas of the volcano to explore.

“All the sites Spock took us to turned out to be outstanding, scientifically relevant sites,” says Camilli.

It’s no coincidence that Spock is being developed as part of a NASA-funded program, the Planetary Science and Technology from Analog Research (PSTAR) interdisciplinary research program. If a robot needs reasoning skills to find its way through Earth’s ocean, it will need them even more to answer open questions about ocean worlds elsewhere in our solar system.

“We don’t want a vehicle on a distant ocean world waiting for us to tell it what to do,” says WHOI senior scientist Chris German, who has also been working with NASA’s Planetary Science Division on technology development. “It would take hours, not minutes, to get a message to a robot in space based on the speed of light.”
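German’s “hours, not minutes” comes down to distance divided by the speed of light. The rough sketch below assumes circular, coplanar orbits with Earth at 1 AU and uses approximate mean orbital distances of 5.20 AU for Jupiter and 9.58 AU for Saturn, the planets whose moons host the ice-covered oceans discussed here; real Earth-to-planet distances also vary with orbital eccentricity, so treat the results as ballpark figures.

```python
# Ballpark light-travel time for radio messages to the Jupiter and Saturn systems.
# Assumption: circular, coplanar orbits with Earth at 1 AU.
AU_KM = 149_597_870.7   # astronomical unit in km
C_KM_S = 299_792.458    # speed of light in km/s

# Approximate mean orbital distances from the Sun, in AU (assumed round values)
PLANETS_AU = {
    "Jupiter (Europa, Ganymede)": 5.20,
    "Saturn (Enceladus, Titan)": 9.58,
}


def one_way_minutes(distance_au: float) -> float:
    """Time in minutes for light (or a radio signal) to cross distance_au."""
    return distance_au * AU_KM / C_KM_S / 60.0


def light_time_range(planet_au: float) -> tuple[float, float]:
    """(closest, farthest) one-way light time in minutes between Earth and the planet."""
    return one_way_minutes(planet_au - 1.0), one_way_minutes(planet_au + 1.0)


for name, a in PLANETS_AU.items():
    near, far = light_time_range(a)
    print(f"{name}: one way {near:.0f}-{far:.0f} min, "
          f"round trip {2 * near / 60:.1f}-{2 * far / 60:.1f} h")
```

Under these assumptions, a one-way signal to the Jupiter system takes roughly 35 to 52 minutes and to the Saturn system roughly 71 to 88 minutes, so even a single question-and-answer exchange with a robot on one of these moons would take more than an hour.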

German has had his eye on other ocean worlds ever since ice-covered liquid oceans were confirmed on Jupiter’s moons Europa and Ganymede and, subsequently, on Saturn’s moons Enceladus and Titan. He says plans are underway to test new autonomous capabilities on WHOI’s AUV Sentry.

“We’re going to have Sentry on the seafloor interpreting data on the fly and making its own decisions as to what’s scientifically interesting,” says German. “Then, it will send us a message indicating what features it found interesting and why, along with information about where it explored, what it looked at, and how it searched.”

Loral O’Hara, an adjunct oceanographer at WHOI who recently became a NASA astronaut, says that the engineering needed to develop ocean vehicles and spacecraft can be very different. But when it comes to technologies and methods required to detect life in our own ocean—or on other planets—there may be common threads.

“Searching for life when you don’t really know what you’re searching for is really exciting, and is one of the challenges we face both here on Earth and on other planets,” she says. “So, we’re working on instruments and system architectures that will allow us to do that in very diverse environments.”

“On other planets, we’ll be looking for life, but we don’t really know what we’re looking for,” says O’Hara. “So that creates a lot of challenges: how do we detect life, what sensors do we need, etc. It’s mind-boggling, but that’s the really exciting part.”

The virtual ocean

Gains in robot intelligence will undoubtedly lead to more ocean monitoring and sampling, particularly in areas that have been too dangerous or remote for oceanographers. But sampling the ocean is only one part of the equation. In order to make accurate predictions of long-term changes, ocean scientists will need to rely on a completely different technology used above the surface: computer models.

“Models are incredibly good at forecasting future changes,” says Mara Freilich, an MIT-WHOI Joint Program student who studies how the ocean affects global climate and the cycling of nitrogen and other nutrients. “But the technology we have today could improve in terms of how they simulate certain things.”

She says that some small-scale ocean currents relevant to her studies cannot be simulated well because of limits on model resolution. Climate models typically represent vast swaths of virtual ocean as a pixelated grid of uniform boxes, each spanning tens of kilometers or more. This gives a broad spatial view of the environment as a whole but doesn’t provide enough resolution to represent smaller-scale processes. Still, she expects that as computing power increases, models will overcome these resolution constraints and become more adept at resolving ever-smaller ocean processes.
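Why finer grids demand so much more computing power can be seen with back-of-the-envelope arithmetic. This sketch assumes square boxes tiling roughly 361 million square kilometers of ocean, a fixed number of vertical levels, and a time step that must shrink in proportion to grid spacing (a CFL-type stability limit common to explicit numerical schemes); real ocean and climate models differ in many details, so the cost figures are only indicative.

```python
# Back-of-the-envelope scaling of ocean model cost with horizontal grid spacing.
# Assumptions: square boxes, fixed vertical levels, time step proportional to grid spacing.
OCEAN_AREA_KM2 = 3.61e8  # approximate surface area of the global ocean


def grid_columns(dx_km: float) -> int:
    """Number of dx_km-by-dx_km boxes needed to tile the global ocean surface."""
    return round(OCEAN_AREA_KM2 / dx_km**2)


def relative_cost(coarse_dx_km: float, fine_dx_km: float) -> float:
    """Approximate compute multiplier for refining the horizontal grid.

    Refining by a factor r multiplies horizontal cells by r**2, and the shorter
    stable time step multiplies the number of steps by r, so cost grows ~ r**3.
    """
    r = coarse_dx_km / fine_dx_km
    return r**2 * r


for dx in (50.0, 10.0, 1.0):
    print(f"{dx:>4.0f} km boxes: {grid_columns(dx):,} columns, "
          f"~{relative_cost(50.0, dx):,.0f}x the cost of a 50 km grid")
```

Under these assumptions, going from 50-kilometer to 10-kilometer boxes multiplies the work by about 125, and going to 1-kilometer boxes multiplies it by about 125,000, which is why resolving ever-smaller processes tracks so closely with gains in computing power.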

Another factor that could help fine-tune ocean models is more hard data. “Improving our fundamental understanding of ocean processes through real-world observations will allow us to better represent them mathematically in the computer code,” says Freilich.

MIT-WHOI Joint Program student Mara Freilich demonstrates how a computer model, known as the Process Study Ocean Model (PSOM), simulates swirling ocean currents called eddies. (Photo courtesy of Troy Sankey)

WHOI physical oceanographer Carol Anne Clayson also feels that more observation data is key to optimizing models. She has an eye on what she refers to as “Super Sites”—floating instrument platforms that measure a broad range of parameters in the ocean and the atmosphere.

“Typically, when we have a permanent monitoring site in the ocean, we’re only measuring a few things,” says Clayson. “The idea here is to measure everything we can in a relatively small area for a few years at a time—from biogeochemical and physical processes underwater to turbulence in the atmosphere—so we can provide more comprehensive statistics that higher-resolution models will need.”

Freilich says machine learning, a form of AI that enables systems to learn from data, could make models more capable by discovering patterns in hard data that reveal how particular ocean processes work. These patterns can then be incorporated into high-resolution models to help make predictions.

WHOI physical oceanographer Young-Oh Kwon agrees. He, too, uses computer models to simulate ocean circulation systems, and says the use of machine learning to enhance models looks promising.

“Many in the community talk about the potential for machine learning to connect observation data and models, and figure out where models can be improved upon based on data collected in the ocean,” he says. “That’s a very exciting area.”

Science into action

In a 1977 research paper, physical oceanographer Walter Munk—often referred to as the “Einstein of the ocean”—commented on the lack of samples collected by the oceanographic community prior to the 1960s. “Probing the ocean from a few isolated research vessels has always been a marginal undertaking, and the first hundred years of oceanography could well be called ‘a century of under-sampling,’” he wrote.

WHOI deep-sea biologist Taylor Heyl (in foreground) explores Lydonia Canyon in the OceanX submersible Nadir during a dive in the Northeast Canyons and Seamounts National Monument. (Photo by Luis Lamar for National Geographic)

One hundred years from now, some may call what we’ll be doing “over-sampling”—particularly if advances in networked oceans, sensors, underwater imaging, and decision-making robots are brought to bear. Collectively, these innovations should yield dramatic shifts in our understanding of the global ocean.

Bellingham puts it simply: “When you extend the reach of your tools, you learn more about the world around you.”

Beyond a better understanding of the ocean, however, new tech will allow scientists to put real-time information into the hands of policy makers, resource managers, and others who can use it to plan sustainable uses of the ocean, adapt to changing ocean conditions, and create better governance and accountability over its use.

“How and where the ocean is managed in the future depends heavily on the technology advances to come,” says Bellingham.

The human connection

While technological advances will propel ocean science forward, Soule points out that human exploration will play a key role well into the future. It will still be vital, he says, for scientists to go to sea and immerse themselves in underwater environments in order to see what’s down there, interpret it, and make decisions.

“You still need the creativity of people to make sense of it all,” he says.

McGillicuddy echoes the sentiment. He notes that while networked oceans and fully autonomous robots will play exciting and important roles in our ocean future, past experience shows that some of our greatest discoveries come from people who are present at sea and able to recognize the unexpected: properties and phenomena for which we don’t have autonomous sensors.

“The future will be woven from a mixture of modern technology and ocean scientists who take water samples from the ocean and look at them the old-fashioned way,” he says. “We’re not giving up on bean cans just yet.”

The remotely operated vehicle (ROV) Jason is captured by the MISO GoPro 12MP digital camera developed by WHOI scientist Dan Fornari. The system combines specialized optics that correct for visual distortion underwater, about 20 hours of battery life, and a pressure-resistant housing designed for ocean depths of 6,000 meters (19,685 feet). (Photo courtesy of Dan Fornari, © Woods Hole Oceanographic Institution, and Rebecca Carey, Univ. of Tasmania/NSF/WHOI-MISO)